I’ve been gradually updating the WordPress theme that powers this site with the help of a very talented designer and thinker, my friend Bec Worth.

It began with conversations about overhauling her own site. She had a few disparate Tumblrs with a ton (and I really do mean a ton) of great references, photos, and more that had accumulated over the years. All of them had fallen into disuse for one reason or another, but she still felt that some sort of outlet for collecting these snippets, along with longer-format writing, would be really useful. She brought up the Commonplace Book as a particular inspiration. I’d never come across it before, but it really resonated.

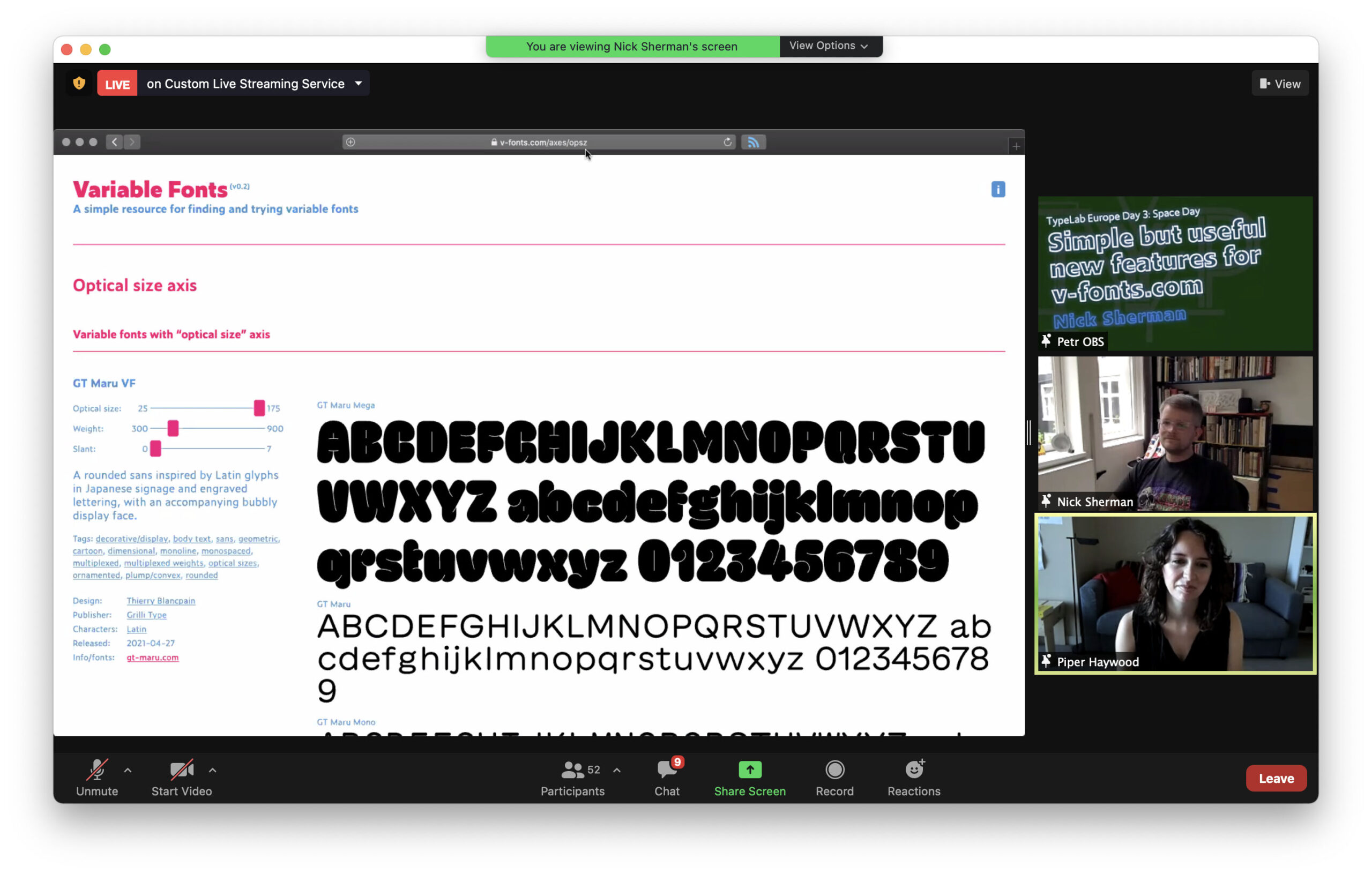

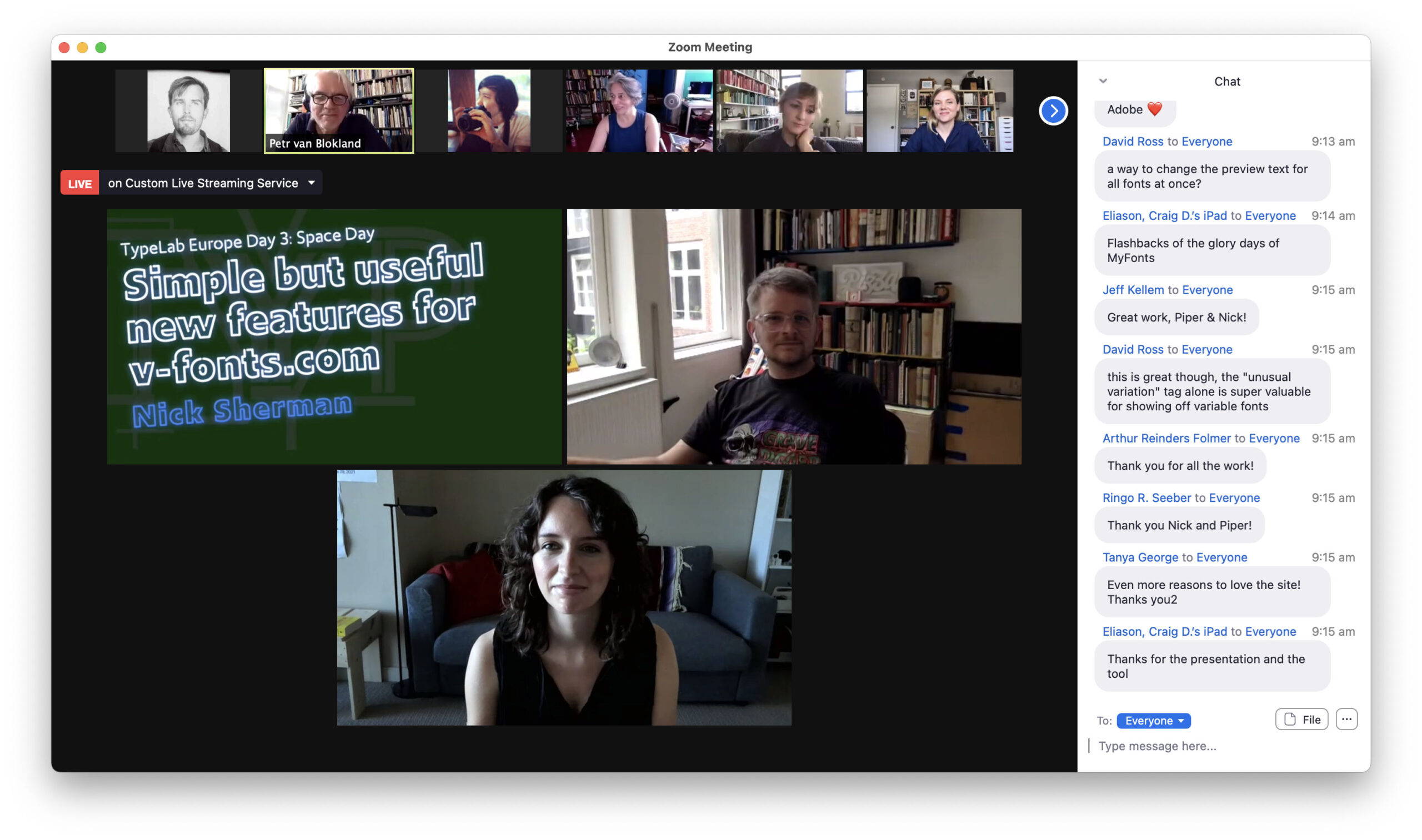

We continued talking about her site, and I started to restructure my old color-heavy Notebook theme (view in Wayback Machine) to strip out the less necessary functionality, improve the accessibility, etc. I wanted to make it something that could be more widely useful, not just to me and Bec but to others as well. The early version of this new theme used variable Work Sans (view in Wayback Machine).

She liked where it was going, so we got her set up on a WordPress instance and used the Tumblr importer to pull in all of that old content. Since then, we’ve been using her log and my site to test out ideas and continue pushing the idea of what a Commonplace Book could be on the web. For more along these lines, I recommend reading her post “What would a Commonplace Book feel like on the web?”

What’s next

It’s far from finished. The type is nowhere near as tight as Bec’s designs, so I need to spend a bit more time on that! Amongst other things, I need to clean up the table of posts, add a thumbnail view, and improve the gallery block styles. We’re also going to figure out a way of highlighting work and other projects, something that draws a bit more attention than normal posts.

And color! We’d like to make it possible for people to select preferred text colors, maybe on a post-by-post basis or per category. Color is tricky though: I’d like to preserve some baseline of legibility, and I’m not sure how much I could do as the developer to enforce that. Also, how do we handle this if we introduce dark mode support? The HSL or LCH color spaces might be helpful.
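As a rough sketch of how LCH might help here (nothing like this exists in the theme yet): a post or category could choose only a hue, while the theme keeps control of lightness and chroma and swaps them for dark mode. The `--post-hue` custom property, the `category-design` slug, and the specific numbers are all assumptions for illustration, and the selectors assume the theme outputs WordPress's standard `post_class()` classes.

```css
/* Hypothetical: the theme owns lightness and chroma; authors pick a hue. */
.hentry {
  --post-hue: 250; /* assumed default hue, in degrees */
  /* Light mode: low lightness keeps text dark on a light background. */
  color: lch(35% 50 var(--post-hue));
}

/* Hypothetical per-category override: only the hue changes. */
.hentry.category-design {
  --post-hue: 20;
}

@media (prefers-color-scheme: dark) {
  .hentry {
    /* Dark mode: same hue, higher lightness for a dark background. */
    color: lch(80% 40 var(--post-hue));
  }
}
```

LCH lightness is roughly perceptual, so holding it fixed should keep contrast against a known background fairly steady across hues. The same trick with `hsl()` would be less reliable, since a yellow and a blue at the same HSL lightness look very different. Either way, the final values would still need checking against a contrast target.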

I’m not planning to submit this to the WordPress theme directory. Right now, this means that installation and updates are pretty manual: the theme has to be uploaded via FTP before it can be installed. Because of that, I’ll eventually set up an update server so that anyone using the theme can perform one-click updates from the WordPress admin area. Note to self: see this article for more on how to do this.

Realistically, people using the theme might want to change up certain aspects of it to be more “them”. Instead of adding a ton of theme options like font pickers and that sort of thing, I’d like to encourage people to tinker with it themselves. This is going to require a bit of documentation to point people in the right direction. I’ll probably start with how someone with little-to-no CSS experience could go about changing the font (e.g. uploading font files to the Media library and then adding the necessary CSS lines in the Customizer, or setting up a child theme).
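To give a sense of how small the Customizer route could be, here is a minimal sketch of the CSS someone might paste into the Additional CSS panel. The font name, the file URL, and the `body` selector are placeholders, not the theme's actual values: the URL would be whatever the Media library shows for the uploaded file, and the theme may set its fonts on different selectors. It also assumes the site accepts font file uploads at all, which depends on its allowed upload types.

```css
/* Hypothetical font name and URL: swap in the uploaded file's real URL. */
@font-face {
  font-family: "My Font";
  src: url("https://example.com/wp-content/uploads/my-font.woff2") format("woff2");
  font-display: swap; /* show fallback text while the font loads */
}

/* Assumed selector: the theme may apply its font elsewhere. */
body {
  font-family: "My Font", sans-serif;
}
```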

Clearly, it’s a work in progress!

But anyone is welcome to give it a try for themselves. I recommend it if you’ve been looking for a place to keep important references or get thoughts out of your head. Head to the commonplace-wp-theme GitHub repository to download it and read a bit more.

If you do end up using it, we’d love to know.